The 5 Biggest Limitations of Large Language Models (LLMs) in 2025 and How Scientists Are Solving Them

Table of Contents (TOC)

- Introduction: The Era of LLM Reality Checks

- Limitation 1: Hallucinations and Factual Inconsistency

- The Solution: Retrieval-Augmented Generation (RAG)

- Limitation 2: Context Window Constraints and "Forgetting"

- The Solution: Infinite Context and Memory Agents

- Limitation 3: Lack of True Reasoning and Planning

- The Solution: Chain-of-Thought (CoT) and Self-Correction

- Limitation 4: High Cost and Inefficiency (Inference Problem)

- The Solution: MoE and Quantization Techniques

- Limitation 5: Data Privacy, Bias, and Ethical Risks

- The Solution: Differential Privacy and Synthetic Data

- Conclusion: Moving Towards Competent AGI

1. Introduction: The Era of LLM Reality Checks

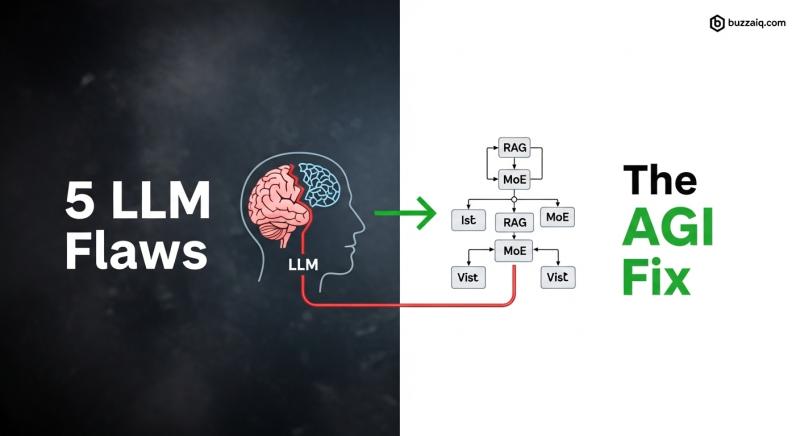

Large Language Models (LLMs) like GPT-4, Claude, and Gemini have reshaped human-computer interaction. However, as we move through 2025, the initial hype has given way to a more grounded understanding of their flaws. LLMs are extraordinary prediction engines, but they are fundamentally limited by their architecture and training data. Overcoming these five core limitations is a central challenge on the road to Artificial General Intelligence (AGI). Fortunately, AI researchers are deploying powerful new techniques to turn these pattern-matching models into more reliable, intelligent agents.

2. Limitation 1: Hallucinations and Factual Inconsistency

This is arguably the most common and damaging flaw. LLMs, in their quest to predict the next most probable token (roughly, a word or word fragment), often generate highly confident but entirely false or misleading information, known as hallucinations. They do not "know" facts; they synthesize patterns that sound like facts.

- The Solution: Retrieval-Augmented Generation (RAG)

- To combat this, the industry has widely adopted RAG. RAG connects the LLM to an external, up-to-date knowledge source (such as a curated document store, a private database, or live web search). The process works in three steps:

- The user asks a question.

- The system retrieves relevant, factual documents from the knowledge base.

- The LLM generates a response grounded in the retrieved text, which substantially reduces (but does not eliminate) hallucinations.
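The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: it assumes a tiny in-memory list of hypothetical documents, uses bag-of-words cosine similarity as a stand-in for the embedding search that real RAG systems typically use, and stops at building the grounded prompt, since the final LLM call depends on whichever model API you use. The function names (`retrieve`, `build_grounded_prompt`) are illustrative, not a library API.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    # Lowercase alphanumeric words; real systems usually embed text with a neural model instead.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, docs: List[str], k: int = 2) -> List[str]:
    # Step 2: score every document against the question and keep the top-k matches.
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_grounded_prompt(question: str, passages: List[str]) -> str:
    # Step 3: instruct the model to answer only from the retrieved passages.
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using ONLY the sources below. "
        "If they do not contain the answer, say you don't know.\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Step 1: a hypothetical knowledge base and user question.
DOCS = [
    "The refund window is 30 days from delivery.",
    "Support hours are 9am to 5pm, Monday to Friday.",
    "Shipping to Canada takes 5 to 7 business days.",
]
question = "What is the refund window?"
prompt = build_grounded_prompt(question, retrieve(question, DOCS))
print(prompt)  # this prompt would then be sent to the LLM
```

Numbering the sources also makes it easy to ask the model to cite them, which helps users check the answer against the retrieved text.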

3. Limitation 2: Context Window Constraints and "Forgetting"

Every LLM operates within a limited context window: the maximum number of tokens it can process at once, covering both the prompt and the conversation history. Once the dialogue exceeds this limit (e.g., 100,000 tokens), the oldest parts must be truncated or dropped, so the model effectively "forgets" the start of the conversation, leading to incoherent or irrelevant replies.
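A simple way to see where this "forgetting" comes from is to look at how an application might trim chat history to fit the window. The sketch below assumes a crude whitespace-based token count (real models use subword tokenizers, so actual counts differ) and a tiny hypothetical 8-token budget; `fit_to_window` is an illustrative name, not a library function.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages: List[str], max_tokens: int) -> List[str]:
    # Walk backwards from the newest message, keeping messages until the budget is spent.
    kept: List[str] = []
    used = 0
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(msg)
        used += cost
    return list(reversed(kept))


history = ["my name is Ada", "I like chess", "what is my name"]
kept = fit_to_window(history, max_tokens=8)
print(kept)  # ['I like chess', 'what is my name']
```

With this budget the user's name falls out of the window, so a model that only sees `kept` has no way to answer the final question.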

- The Solution: Infinite Context and Memory Agents

- Researchers are moving beyond fixed context limits using two primary methods:

- Memory Agents: Dedicated systems that summarize old conversations, extract key facts, and pass only the summary back into the next prompt, preserving the core context across very long sessions (details left out of the summary are still lost).

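- Advanced Compression: Techniques like Attention Sink (introduced with StreamingLLM) and specialized compression algorithms let models manage and prioritize important tokens, so they can keep processing near-"infinite" input streams. Tokens evicted from the cache are no longer visible to the model, however, so these methods extend how long a model can run stably rather than how much it can recall.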
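Both ideas can be sketched in plain Python. The `MemoryAgent` class below is a hypothetical illustration of the summarize-and-carry-forward pattern; its `naive_summarize` stub stands in for the LLM call a real agent would make. `attention_sink_positions` shows only the cache-eviction rule associated with StreamingLLM (keep the first few tokens as "attention sinks" plus a sliding window of recent tokens), not the attention computation itself. All names here are illustrative, not a library API.

```python
from typing import Callable, List


class MemoryAgent:
    """Keeps the newest turns verbatim and folds older turns into a running summary."""

    def __init__(self, summarize: Callable[[str, List[str]], str], keep_recent: int = 4):
        self.summarize = summarize  # in practice, an LLM call that condenses text
        self.keep_recent = keep_recent
        self.summary = ""
        self.recent: List[str] = []

    def add_turn(self, turn: str) -> None:
        self.recent.append(turn)
        if len(self.recent) > self.keep_recent:
            overflow = self.recent[: -self.keep_recent]
            self.recent = self.recent[-self.keep_recent :]
            self.summary = self.summarize(self.summary, overflow)

    def build_prompt(self, question: str) -> str:
        parts = [f"Summary so far: {self.summary}"] if self.summary else []
        return "\n".join(parts + self.recent + [question])


def naive_summarize(previous: str, turns: List[str]) -> str:
    # Hypothetical stub: keep only turns that mention a name. A real agent would prompt an LLM.
    facts = [t for t in turns if "name" in t]
    return " ".join([previous] + facts).strip()


def attention_sink_positions(seq_len: int, num_sinks: int, window: int) -> List[int]:
    """Token positions kept in the KV cache: the first few 'sink' tokens plus a recent window."""
    if seq_len <= num_sinks + window:
        return list(range(seq_len))
    return list(range(num_sinks)) + list(range(seq_len - window, seq_len))


agent = MemoryAgent(naive_summarize, keep_recent=2)
for turn in ["User: my name is Ada", "User: I like chess", "User: the weather is nice"]:
    agent.add_turn(turn)
print(agent.build_prompt("User: what is my name?"))
print(attention_sink_positions(seq_len=10, num_sinks=2, window=3))  # [0, 1, 7, 8, 9]
```

In both cases the state stays bounded (a summary plus two recent turns; two sink tokens plus a three-token window), which is what lets the conversation or stream continue indefinitely. The trade-off is that anything outside that state is gone.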

4. Limitation 3: Lack of True Reasoning and Planning

LLMs struggle with complex, multi-step tasks that require planning, reflection, and error correction (System 2 thinking). By default, they generate an answer token by token in a single pass, without checking the validity of intermediate steps.

- The Solution: Chain-of-Thought (CoT) and Self-Correction

- This is being addressed by pushing the model to "show its work":

- CoT Prompting: Asking the model to reason step-by-step before arriving at a final answer (e.g., "Think step-by-step and then solve"). This often significantly improves accuracy on math and logic problems, especially with larger models.

- Self-Correction: Advanced agents use a model to generate an answer, then use a second verification model (or the original model with a new prompt) to check the answer against external rules or evidence, iterating until the answer passes the check or an iteration limit is reached.
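The two ideas combine naturally into a generate, verify, and retry loop, sketched below. This is a hypothetical illustration: `stub_model` is a scripted stand-in for a real LLM call (it deliberately makes an arithmetic slip on its first attempt), `arithmetic_checker` plays the role of the external rule that verifies the answer, and the prompt wording is an assumption rather than a standard template.

```python
from typing import Callable, Optional, Tuple


def cot_prompt(task: str, feedback: Optional[str] = None) -> str:
    # Chain-of-thought instruction, plus the checker's feedback on a retry.
    prompt = f"{task}\nThink step-by-step and then solve. End with 'Answer: <value>'."
    if feedback:
        prompt += f"\nA checker rejected your previous answer: {feedback}"
    return prompt


def self_correct(
    model: Callable[[str], str],
    verify: Callable[[str], Optional[str]],
    task: str,
    max_iters: int = 3,
) -> Tuple[str, bool]:
    # Generate, verify, and retry with feedback until the check passes or attempts run out.
    feedback: Optional[str] = None
    output = ""
    for _ in range(max_iters):
        output = model(cot_prompt(task, feedback))
        feedback = verify(output)  # None means the output passed the check
        if feedback is None:
            return output, True
    return output, False


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM: slips on the first attempt, fixes it after feedback.
    if "rejected" in prompt:
        return "17*24 = 17*20 + 17*4 = 340 + 68 = 408\nAnswer: 408"
    return "17*24 = 17*20 + 17*4 = 340 + 58 = 398\nAnswer: 398"


def arithmetic_checker(output: str) -> Optional[str]:
    # External rule: recompute the result exactly instead of trusting the model.
    claimed = output.rsplit("Answer:", 1)[-1].strip()
    expected = str(17 * 24)
    return None if claimed == expected else f"{claimed} is not equal to 17*24."


output, passed = self_correct(stub_model, arithmetic_checker, "What is 17 * 24?")
print(passed, output.splitlines()[-1])  # True Answer: 408
```

The iteration cap matters: if the verifier keeps rejecting answers, the loop returns the last attempt flagged as unverified instead of running forever.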

5. Limitation 4: High Cost and Inefficiency (Inference Problem)

Running large, high-performing models (like GPT-4) requires enormous computational power, especially during inference (generating an answer), because every generated token requires another forward pass through the model. This can make widespread, real-time deployment prohibitively expensive for many enterprises.
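A back-of-the-envelope calculation shows why. The sketch below assumes a hypothetical 70-billion-parameter dense model, counts only the memory needed for its weights (ignoring the KV cache and activations, which add more), and uses the common rule of thumb of roughly 2 floating-point operations per parameter for each generated token.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    # Bytes needed to store the weights alone, expressed in gigabytes (1 GB = 1e9 bytes).
    return num_params * bits_per_param / 8 / 1e9


def forward_flops_per_token(num_params: float) -> float:
    # Rule of thumb for a dense transformer: about 2 FLOPs per parameter per generated token.
    return 2 * num_params


PARAMS = 70e9  # hypothetical 70-billion-parameter dense model

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PARAMS, bits):.0f} GB")
print(f"~{forward_flops_per_token(PARAMS):.1e} FLOPs per generated token")
```

Even at 16-bit precision, the weights alone (140 GB) exceed the memory of many single GPUs, and that compute cost recurs for every token generated, which is why efficiency work targets both fewer active parameters per token and fewer bits per parameter.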

- The Solution: MoE and Quantization Techniques

- The industry is focusing on making models faster and cheaper:

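- Mixture of Experts (MoE): This architecture (used by models like Mixtral) activates only a small subset of the model's parameters (experts) for each token. Mixtral 8x7B, for example, routes each token to 2 of its 8 experts per layer, so only about 13B of its roughly 47B parameters are used per token. This drastically reduces computation per token while maintaining high performance, although all experts must still be held in memory.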

- Quantization: A method that reduces the numerical precision of the model's weights (e.g., from 16- or 32-bit floating point to 4-bit integers), often with only minor accuracy loss, making models much smaller and faster to run on standard hardware.
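Both techniques can be illustrated with toy numbers. The sketch below is a simplified illustration under stated assumptions: `moe_layer` shows top-k routing with a softmax over the selected experts' gate scores (the scheme Mixtral describes, with k = 2), using trivial scaling functions as hypothetical stand-ins for the experts' feed-forward networks, and `quantize_int4` shows simple symmetric per-tensor 4-bit quantization, which is cruder than the group-wise schemes used in practice. All function names are illustrative.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    x: Vector,
    gate: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    # Router: one score per expert, then keep only the k highest-scoring experts.
    scores = [sum(g * xi for g, xi in zip(row, x)) for row in gate]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in chosen])  # renormalize over the chosen experts
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):  # unchosen experts are never evaluated
        out = [o + w * y for o, y in zip(out, experts[i](x))]
    return out, chosen


def quantize_int4(weights: Sequence[float]) -> Tuple[List[int], float]:
    # Symmetric 4-bit: map [-max|w|, max|w|] onto integers in [-7, 7] (clamped to the int4 range).
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> Vector:
    return [qi * scale for qi in q]


# Four toy "experts" that just scale their input by 1, 2, 3, or 4.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[0.1, 0.0], [1.0, 0.0], [0.0, 0.2], [0.0, 0.9]]
out, chosen = moe_layer([1.0, 1.0], gate, experts, k=2)
print(chosen)  # [1, 3]: only 2 of the 4 experts run

w = [0.51, -1.4, 0.07, 1.33]
q, scale = quantize_int4(w)
print(q, [round(v, 3) for v in dequantize(q, scale)])
```

Each 4-bit weight here is recovered to within half a quantization step, and the model stores one small integer per weight plus a single shared scale instead of a full floating-point number.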

6. Limitation 5: Data Privacy, Bias, and Ethical Risks

LLMs are trained on massive, largely web-scraped public datasets that can only be partially filtered, so they can inadvertently encode societal biases and memorize, then expose, private information. Their opaque nature makes it difficult to audit their ethical safety.

- The Solution: Differential Privacy and Synthetic Data

- Addressing this involves changes to the training pipeline:

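- Differential Privacy (DP): A technique that adds mathematically calibrated noise during training (in DP-SGD, to clipped per-example gradients) so that no single training example can strongly influence the model, which limits its ability to memorize and reproduce specific, sensitive data points.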

- Synthetic Data Generation: Training models primarily on algorithmically generated data that is carefully balanced and contains no real-world private records, creating a cleaner foundation for learning. Synthetic data can still inherit biases from the models or rules that generate it, so it reduces rather than removes this risk.
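The core of DP-SGD, the most common way differential privacy is applied to model training, can be sketched as a single gradient-aggregation step: clip each example's gradient to a maximum L2 norm, sum the clipped gradients, add Gaussian noise scaled to that norm, and average. This is a simplified illustration with hypothetical gradients and parameter values; it omits the privacy accounting that turns the noise level and number of training steps into a formal (ε, δ) guarantee.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def clip_by_norm(grad: Sequence[float], max_norm: float) -> Vector:
    # Scale the gradient down so its L2 norm is at most max_norm.
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def dp_sgd_gradient(
    per_example_grads: List[Vector],
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> Vector:
    # 1) Clip each example's gradient, bounding any single example's influence.
    clipped = [clip_by_norm(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    # 2) Add Gaussian noise whose standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 3) Average over the batch to get the update direction.
    return [n / len(per_example_grads) for n in noisy]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4], [-1.0, 2.0]]  # hypothetical per-example gradients
print(dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping is what makes the noise meaningful: because no example can contribute more than `max_norm` to the sum, a fixed amount of noise is enough to mask whether any particular record was in the batch.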

7. Conclusion: Moving Towards Competent AGI

In 2025, LLMs are no longer just technological novelties; they are essential tools whose limitations must be understood and mitigated. The solutions being deployed—from RAG for facts to MoE for efficiency and CoT for reasoning—are collectively shifting LLMs from simple pattern-matchers toward competent, reliable, and specialized AI agents. This continuous cycle of identifying flaws and engineering architectural solutions is the critical path toward achieving true Artificial General Intelligence.

1. What is the main difference between LLMs and AGI?

Answer: LLMs are Narrow AI; they excel at specific language tasks based on pattern recognition. AGI (Artificial General Intelligence) refers to a hypothetical General AI that could understand, learn, and apply knowledge across any task, exhibiting human-level reasoning.

2. How does RAG reduce LLM hallucinations?

Answer: RAG grounds the LLM's response in documents retrieved from an external knowledge source instead of letting the model rely solely on patterns from its training data. This greatly reduces hallucinations but does not eliminate them, since retrieval can return irrelevant passages and the model can still misstate what it retrieved.

3. Why is the Mixture of Experts (MoE) architecture important?

Answer: MoE lowers the compute cost and latency of running large LLMs (inference) by activating only a small subset of the model's parameters (a few experts) for each token, although all experts must still be held in memory.